Empowering institutions to effectively assess academic program utility and effectiveness

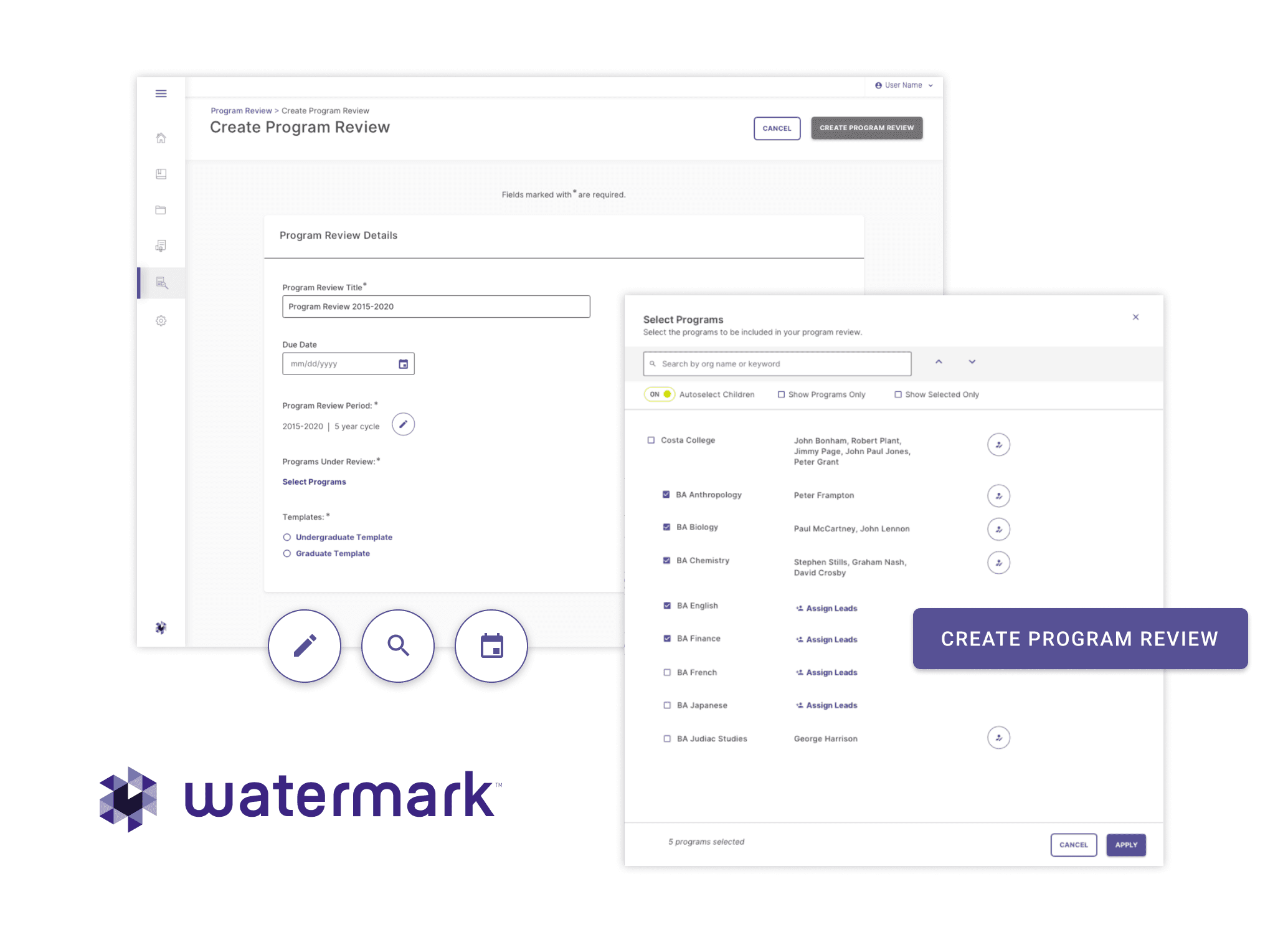

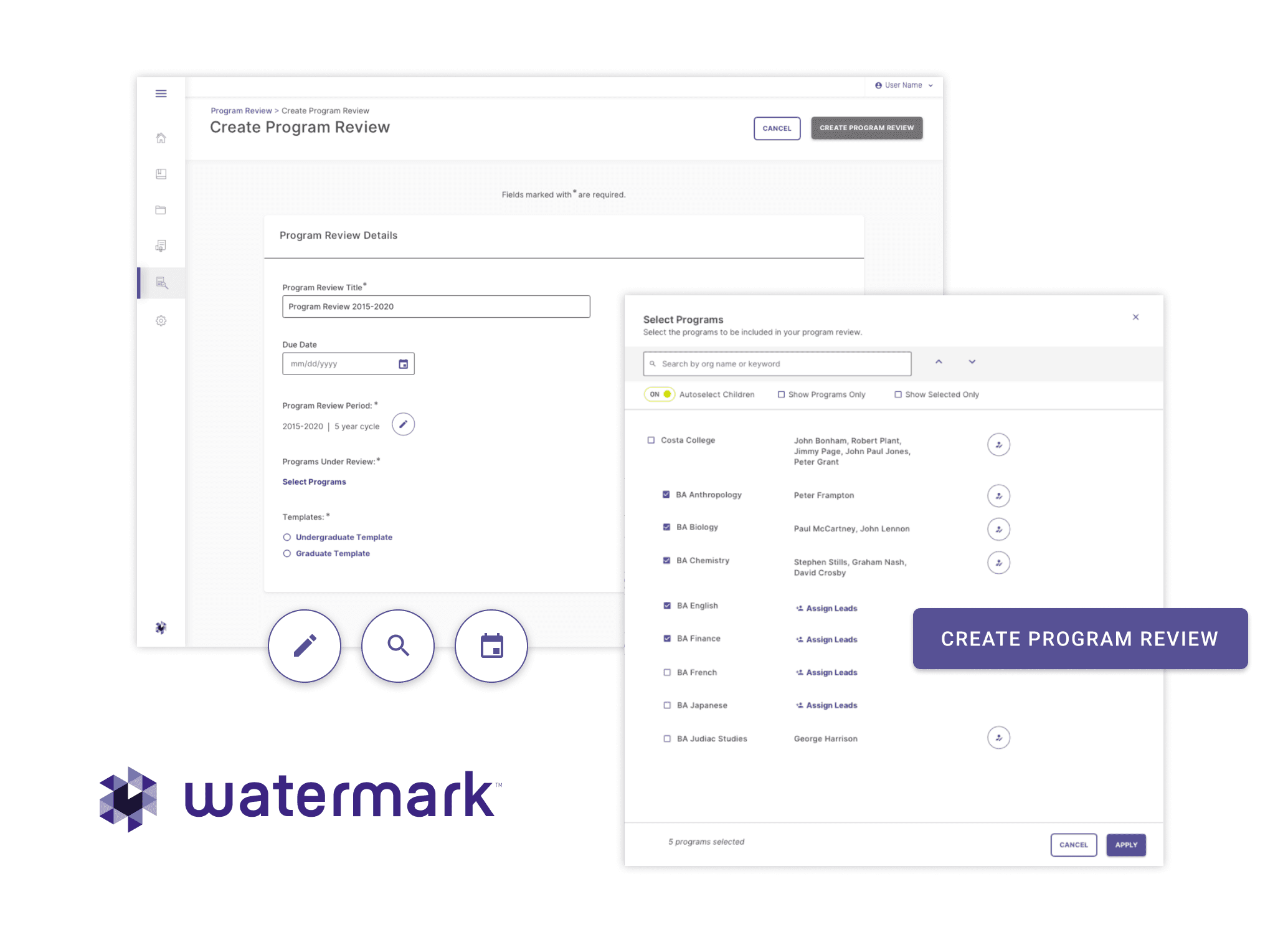

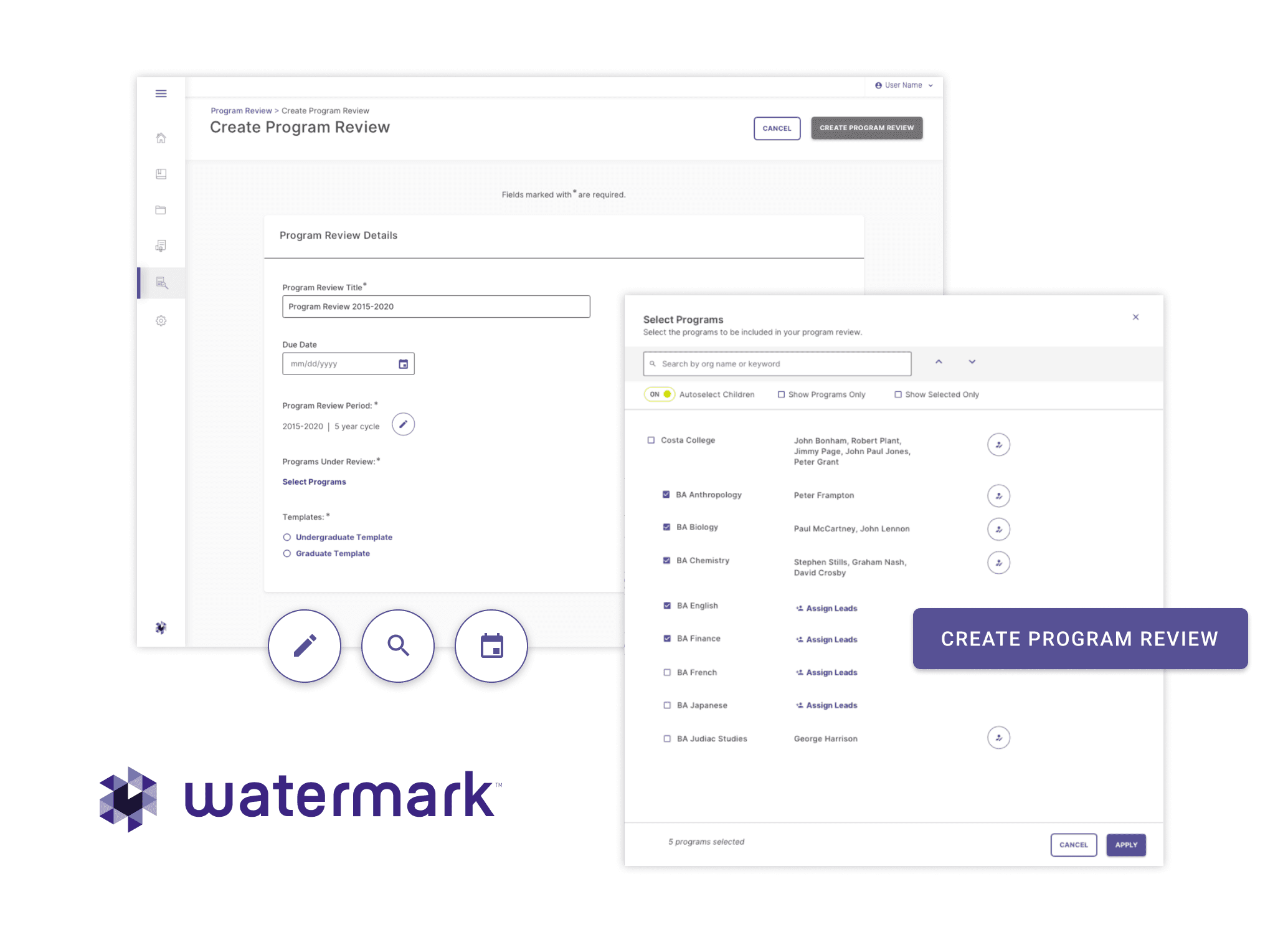

The Opportunity

Program Review is a comprehensive evaluation of a program's status, effectiveness and progress. A robust program review practice is essential to retaining accreditation and ensuring the development of an institution's programs.

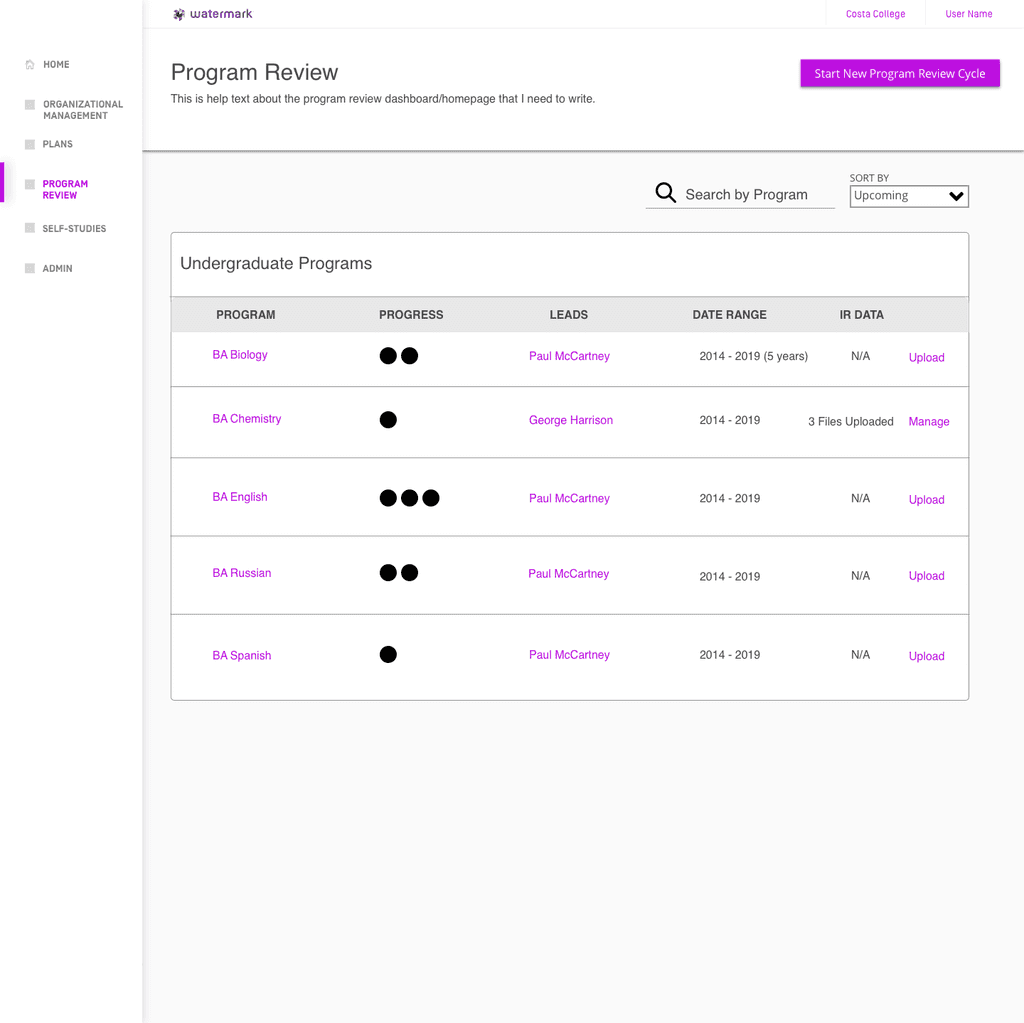

Institutions use a siloed, disjointed approach that leads to pain points across the program review experience. They retrofit inflexible software (such as Watermark's legacy assessment system) to fit their program review processes. Administrators struggle to track down faculty and monitor progress, and faculty use a variety of mediums to complete and submit their reports. These inefficiencies present an opportunity to greatly improve the program review process by providing:

the ability for administrators to schedule, track and route program reviews throughout the project lifecycle

a workspace for faculty to complete the narrative report in-product

data integrations from other areas of Watermark's Education Intelligence System (EIS) to allow faculty to easily analyze and answer program review prompts

a repository for all evidence files - documents that support the narrative report

Team

Product Manager, Front-End Engineer, Back-End Engineer, Business Analyst

Role

Product Design (0 to 1)

Date

2019-2021

Understanding Relevant Personas

The team kicked off the project with a 3-day workshop focused on mapping out the program review process and cultivating empathy for the users involved. Some preliminary user interviews had already been conducted (the project had been started, then stopped due to business constraints, before I joined the team). From those interviews, we knew we were designing for two main user groups: "administrators", who set up and project-manage the process, and "contributors", the faculty who actually write the narrative report.

Based on the user interviews, we drew up empathy maps to understand how each group's thoughts, feelings, and motivations relate specifically to the program review process.

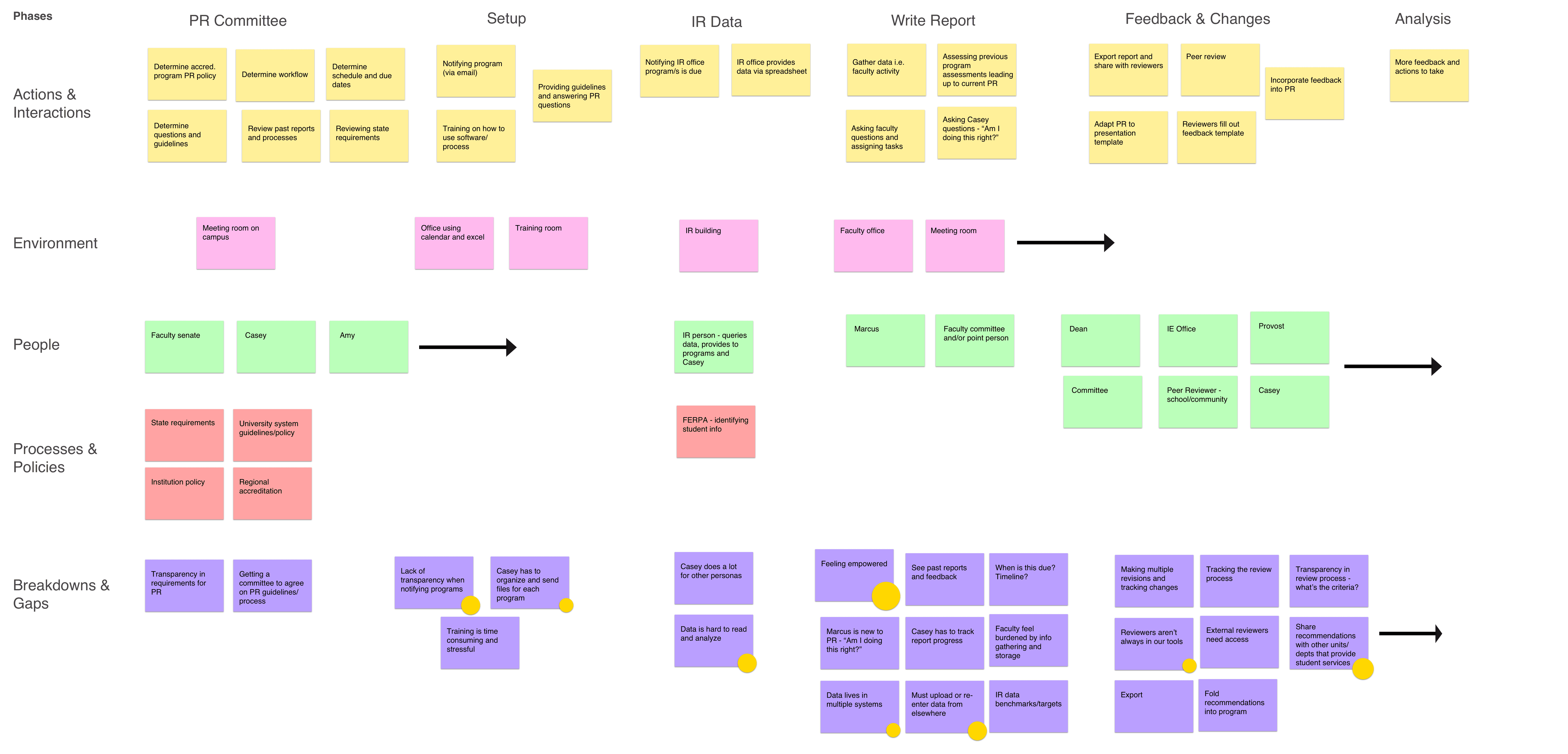

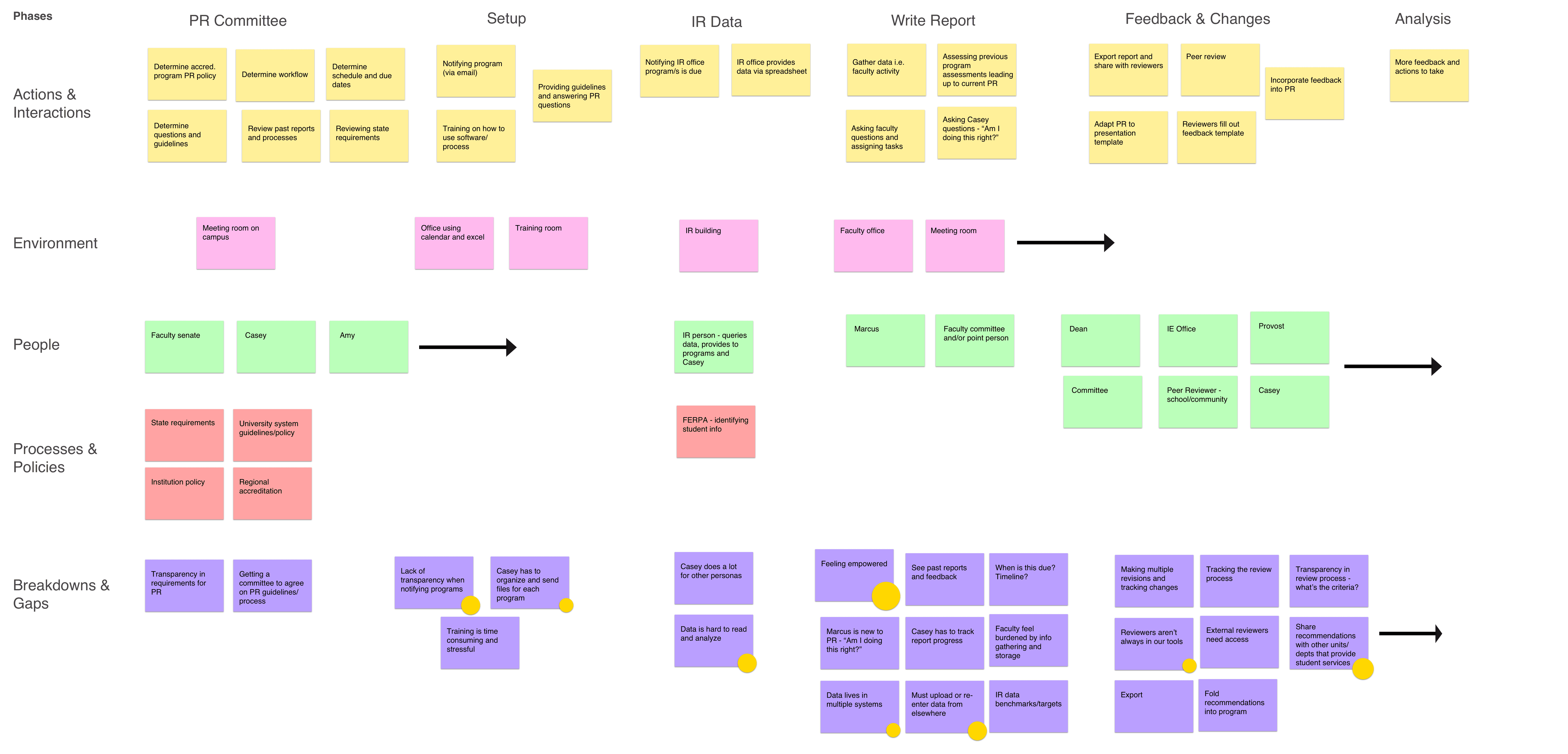

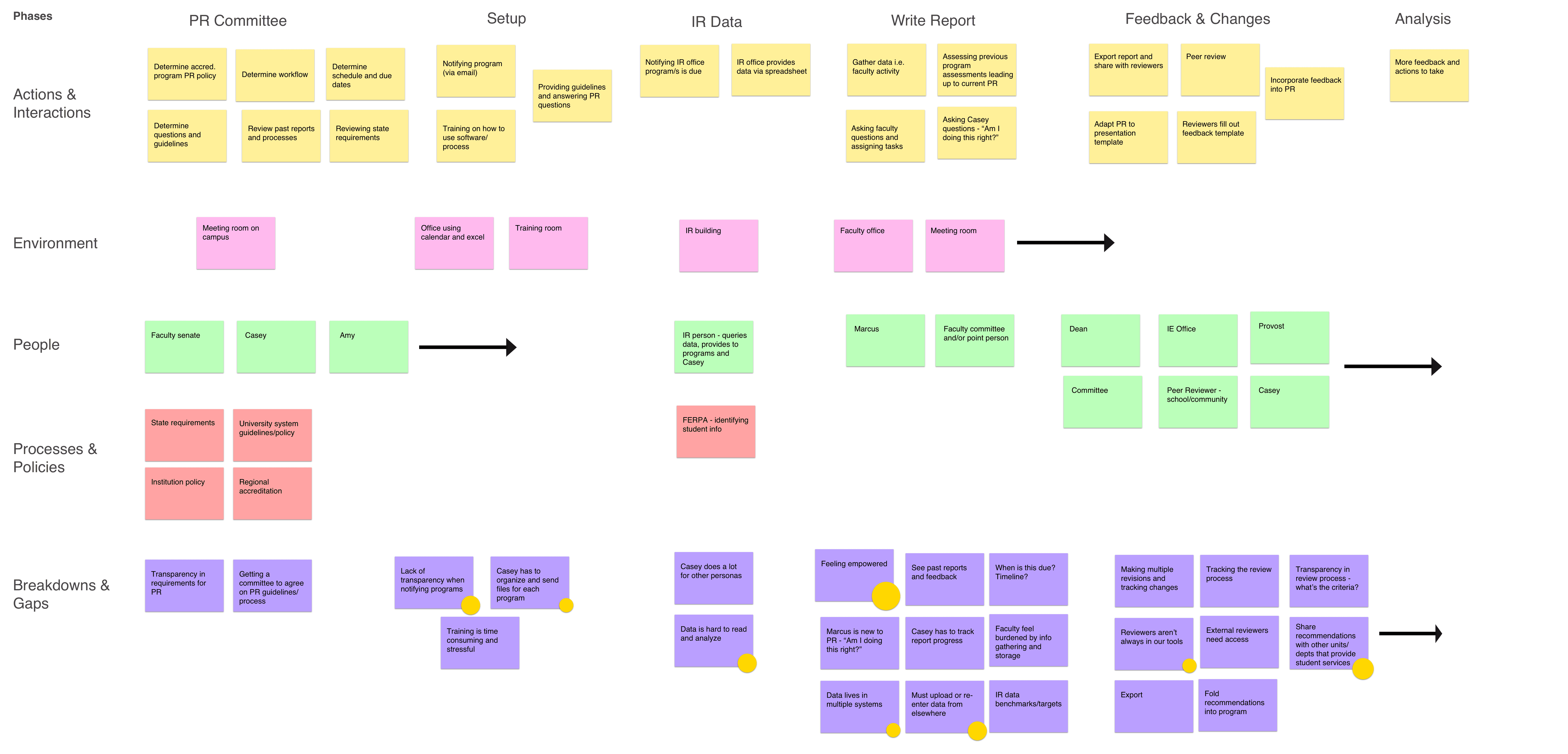

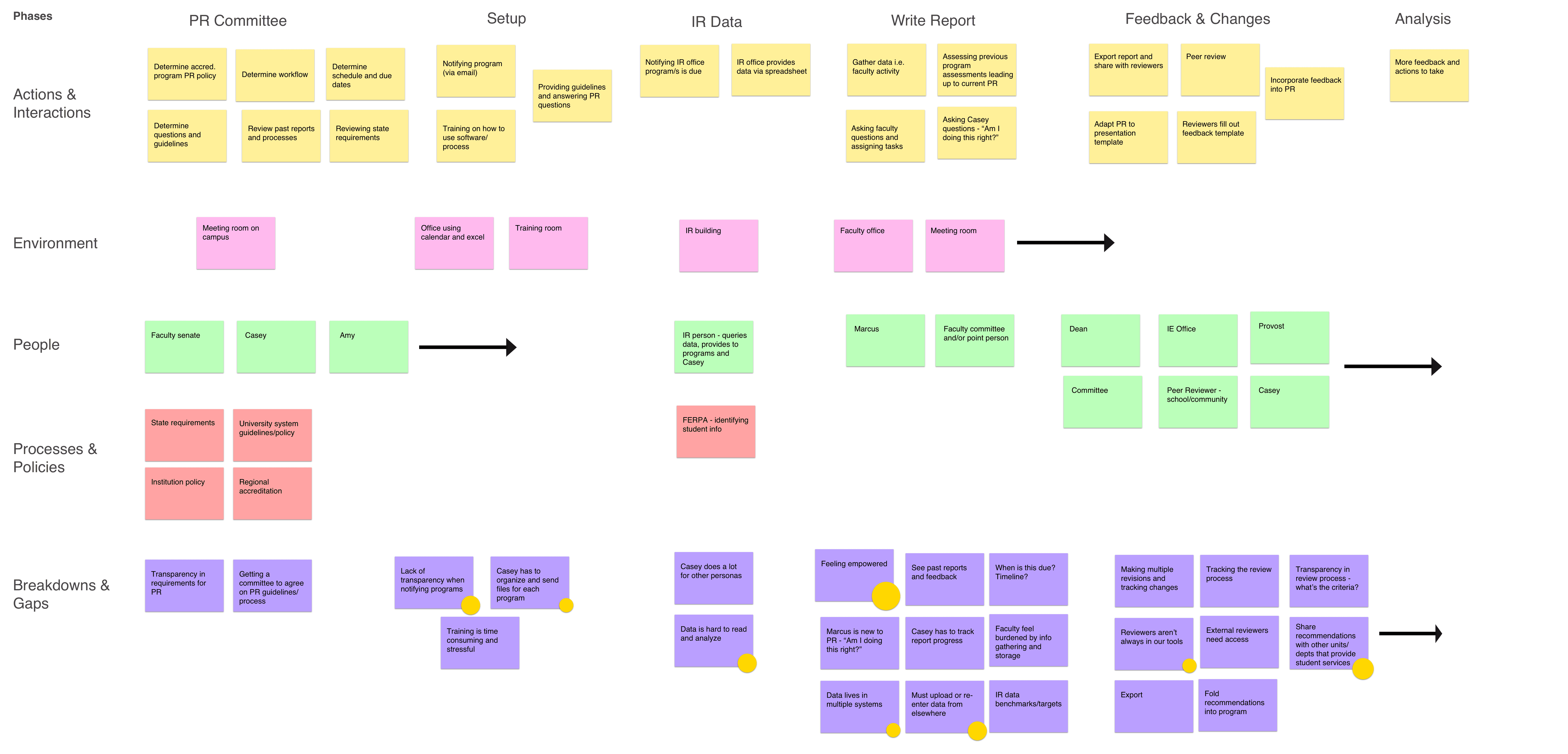

Mapping the User Journey

Using what we learned from the preliminary user interviews, we mapped out the administrator and contributor journeys to get a comprehensive understanding of what each group's program review experience currently looks like. Visualizing the journey helps establish a shared understanding among project stakeholders and tease out further questions and assumptions.

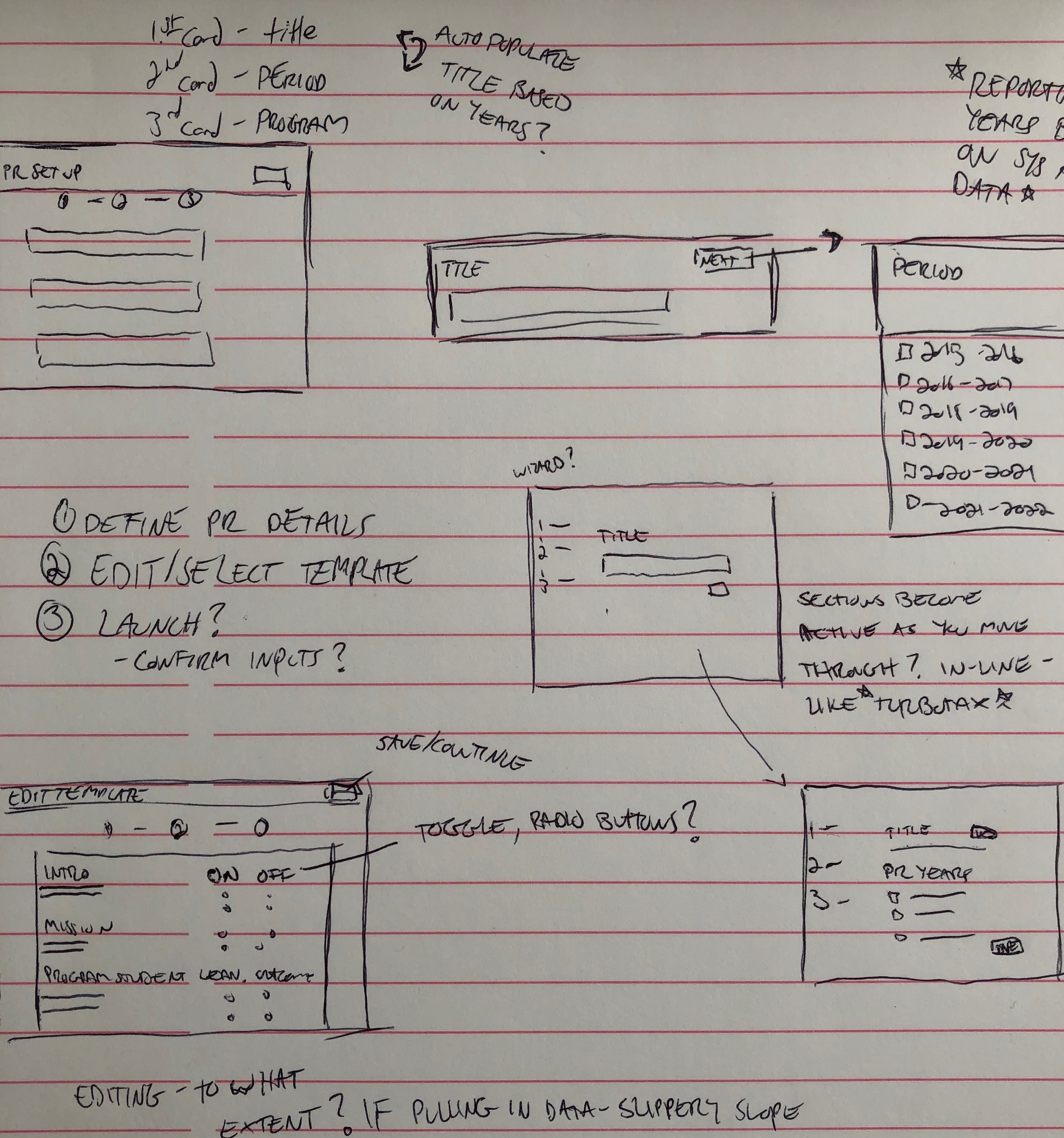

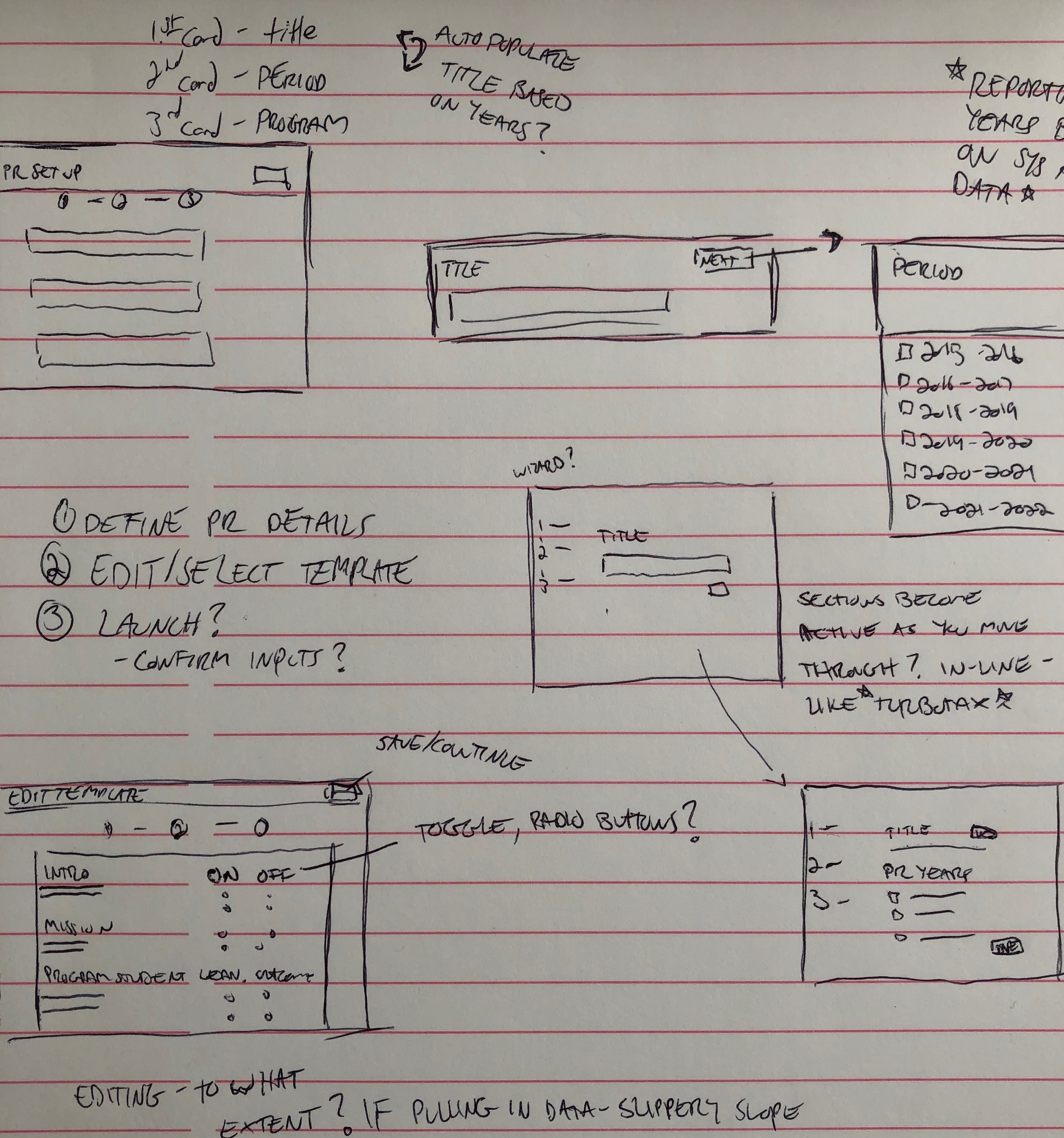

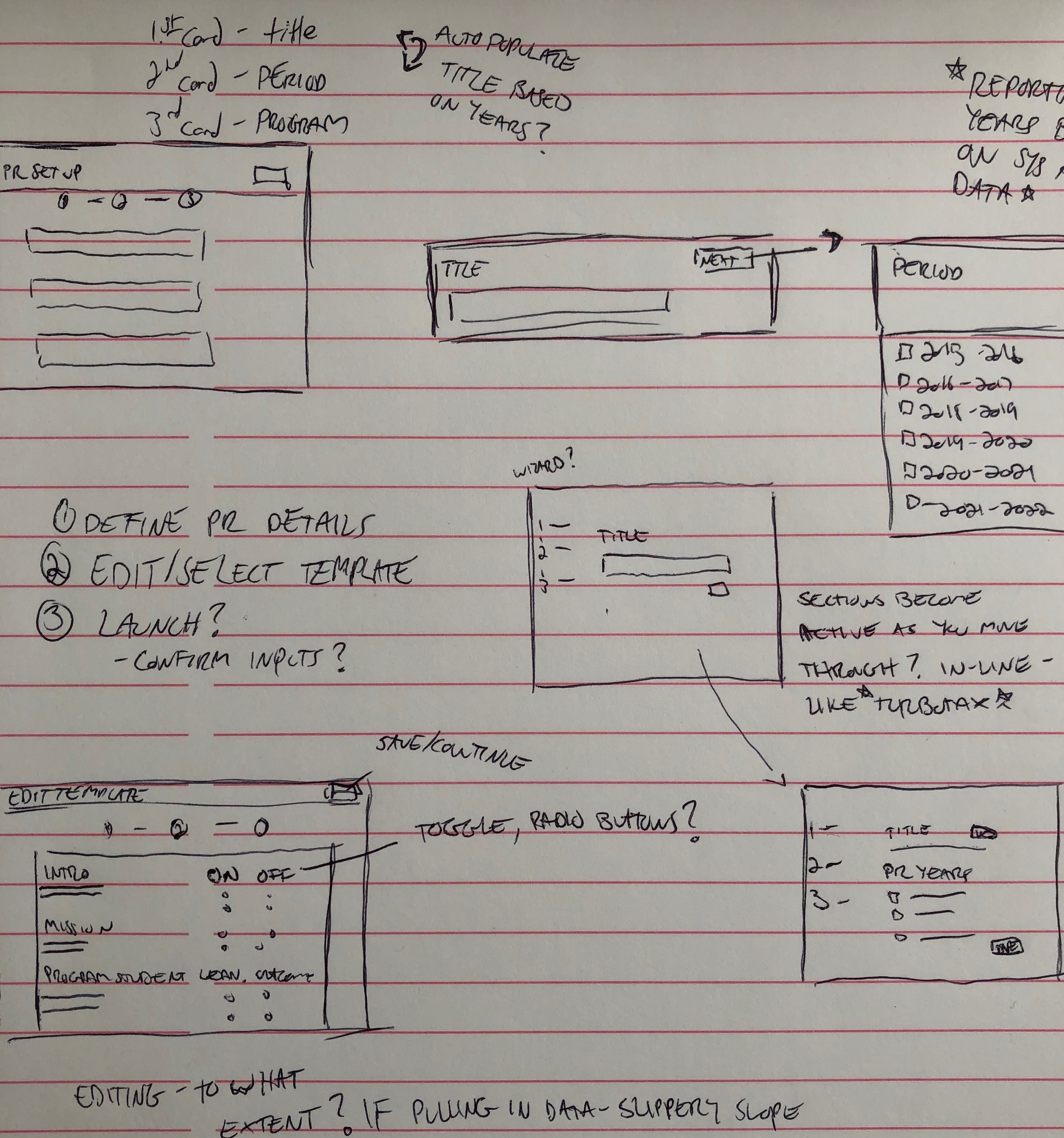

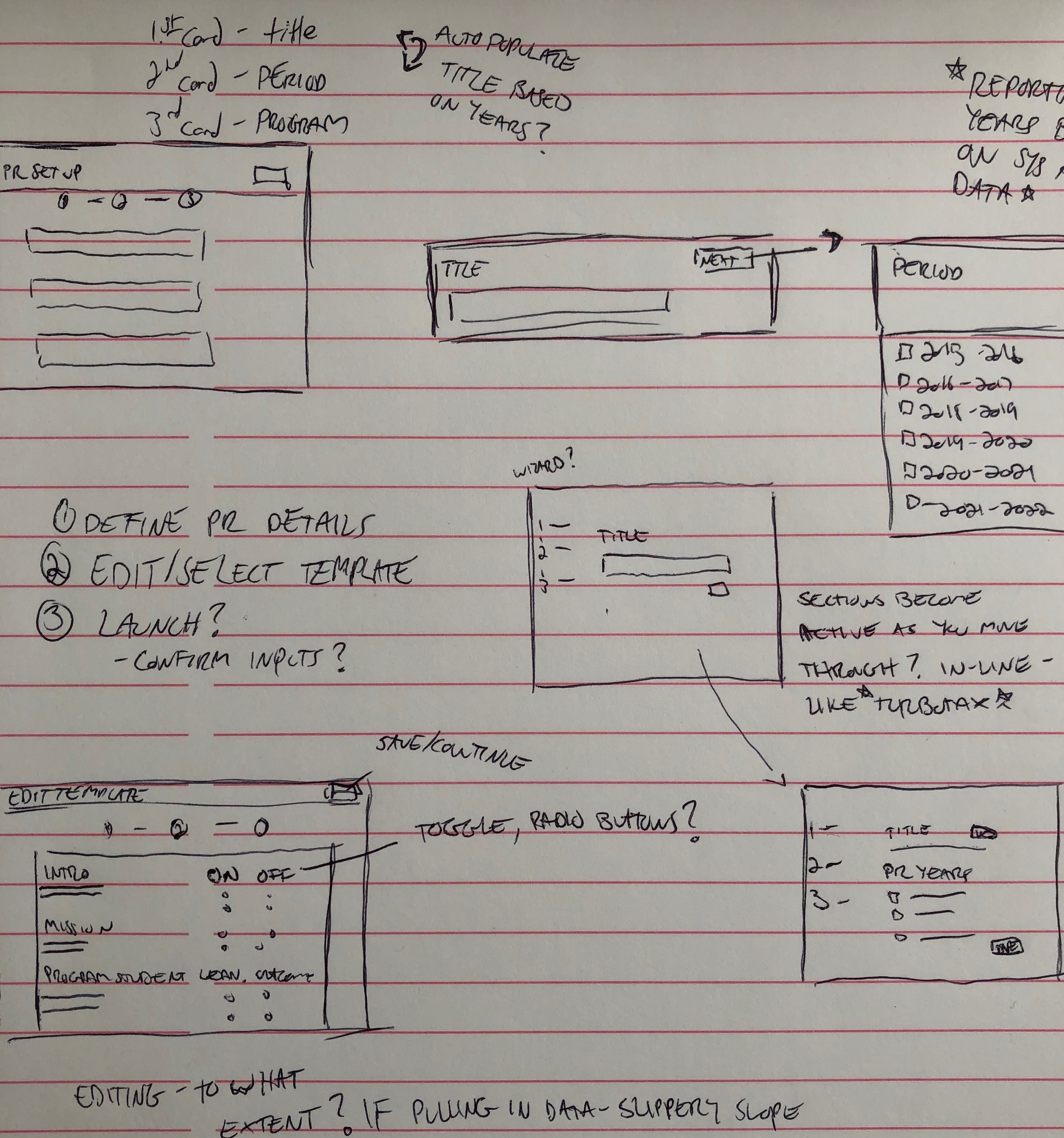

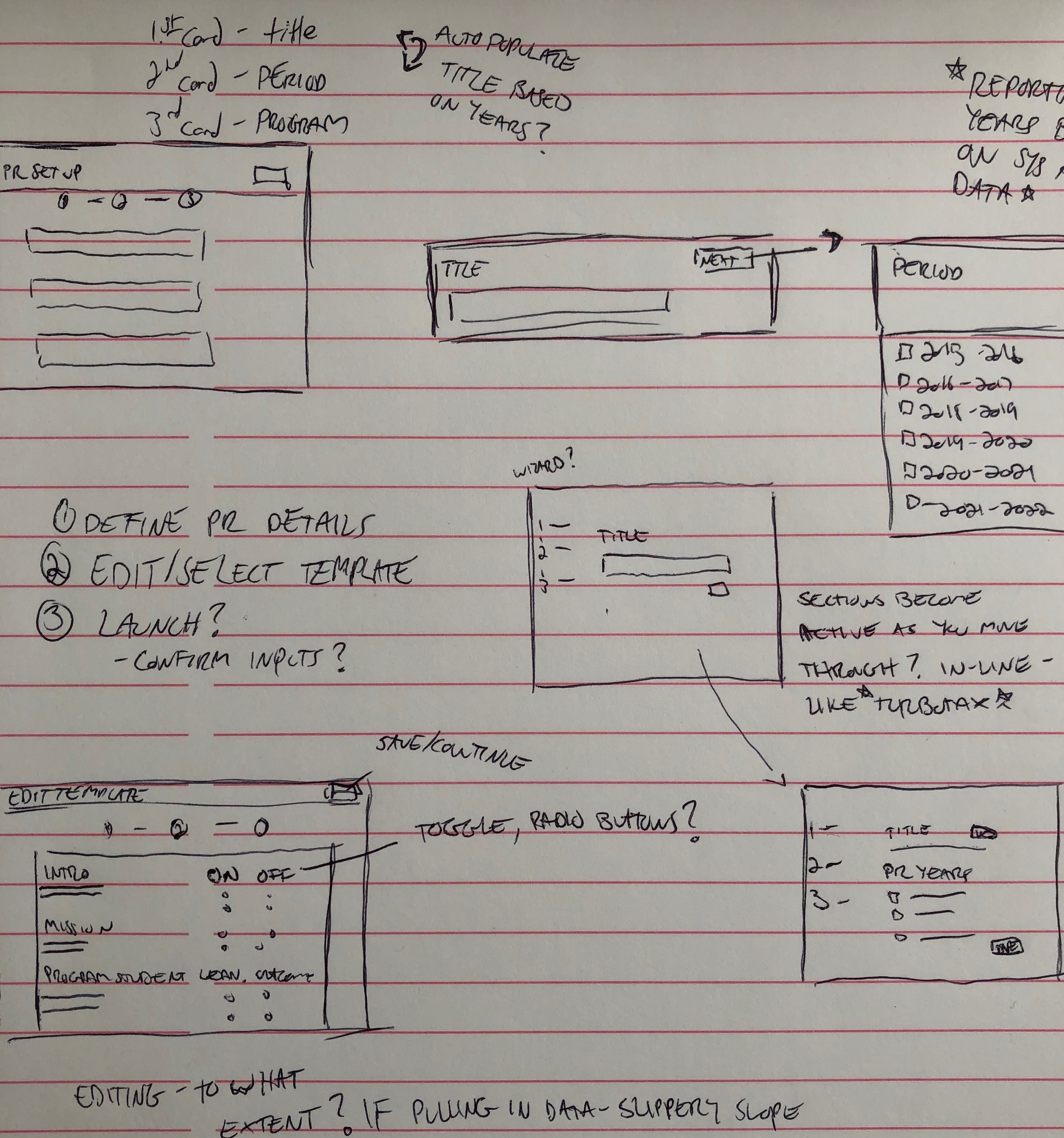

We expanded the map to include environmental and external factors, and we noted the pain points users perceive in the current program review process. This served as a great launching point, giving us an idea of which themes to delve into as we continued user interviews:

communication between administrators and contributors (those who actually write the report)

contributors not feeling empowered to write an insightful report due to a lack of access to data

User Interviews

My Product Manager and I conducted additional user interviews based on the assumptions and questions generated during our workshop. We interviewed 4 administrators from 4 different institutions. It was important to talk with users from a variety of institutions to make sure we were solving for as many use cases as possible. Example questions included:

Describe your program review process. What is the timeline? Who is involved?

What are your pain points for your program review process? Are there any challenges you have that come to mind?

Who needs to look at individual program review reports? Is there feedback provided to the programs? How is this feedback provided today?

I had already started sketching some ideas, translating the themes we were seeing into features and functionality, but after this round of user interviews I had plenty to work from.

Ideate and Iterate

Sketching is my preferred way to get ideas down quickly. I'll often time-box myself; for example, I give myself 5 minutes to come up with as many solutions to a given problem as possible. That way, I don't have time to judge my ideas, which makes it a useful brain dump.

I photograph the sketches I think I can build on and keep them in my sketch document as I wireframe and, eventually, move to high fidelity. Having a timeline of artifacts gives context when communicating ideas to the team.

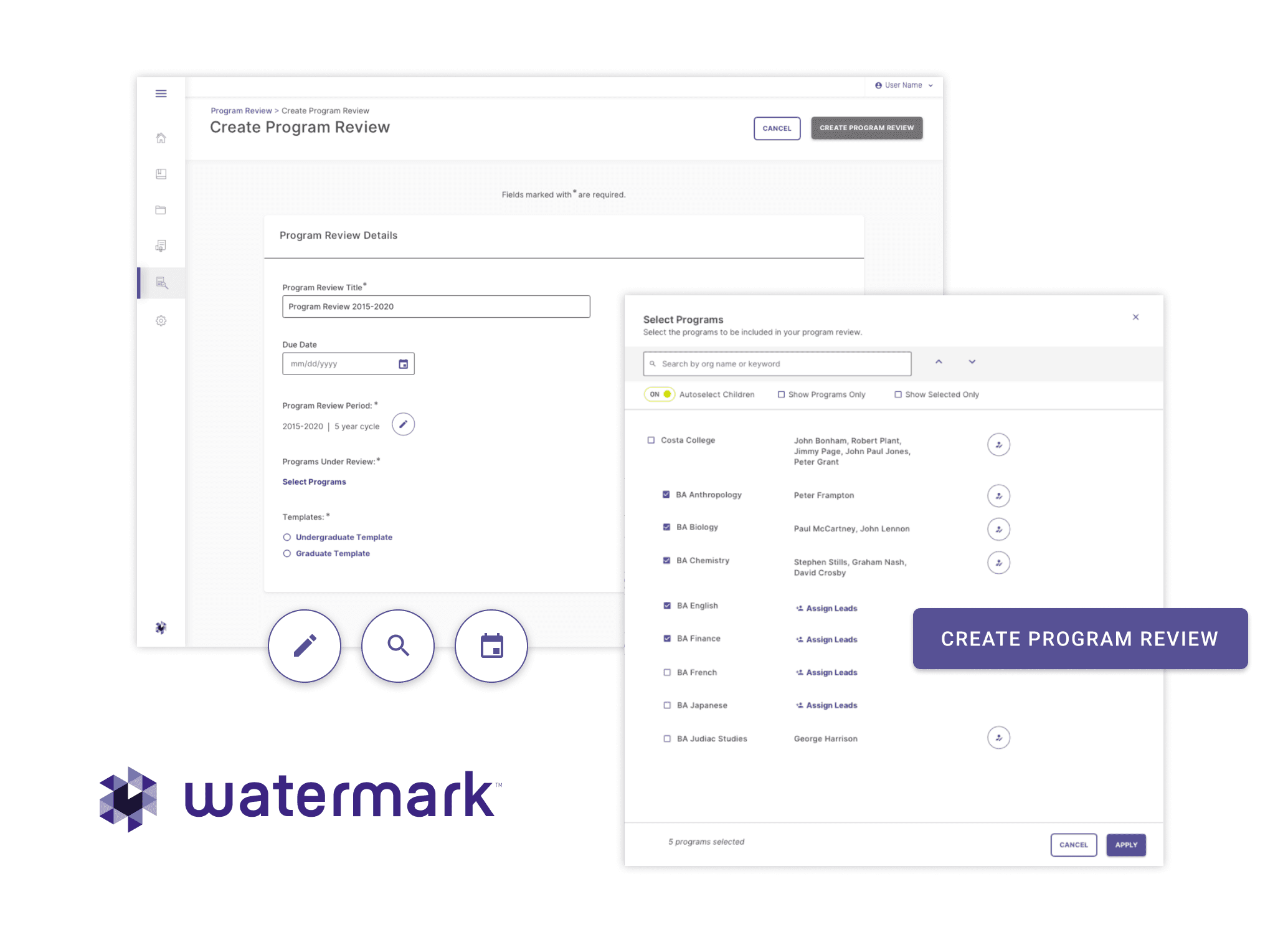

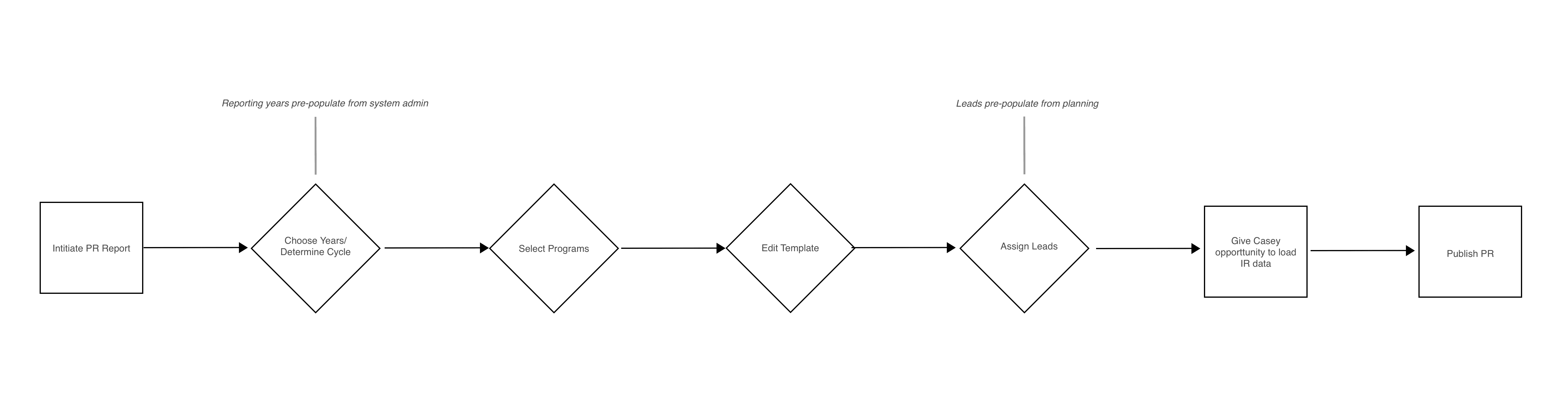

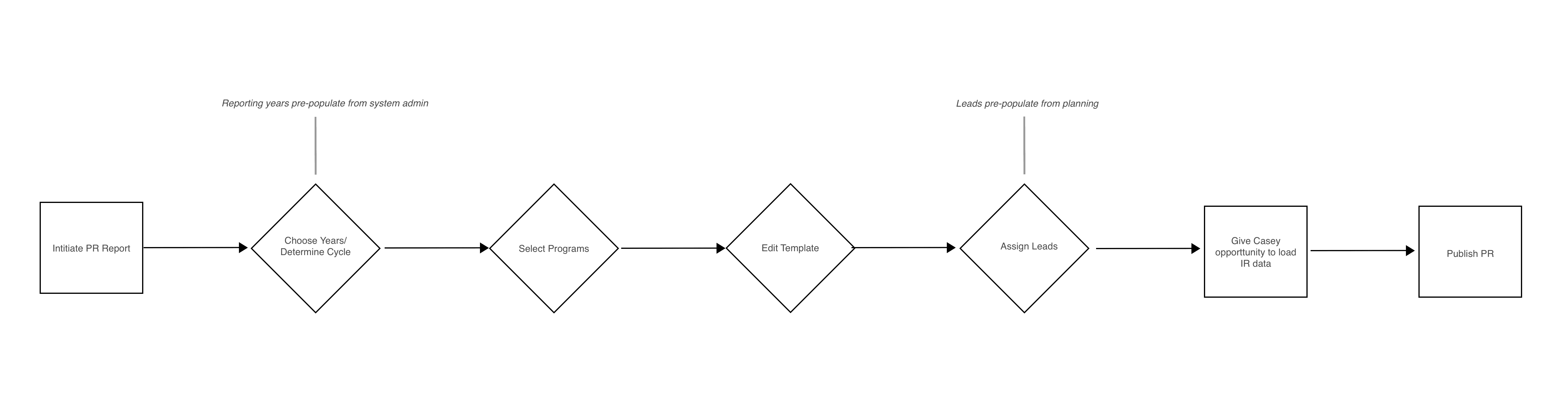

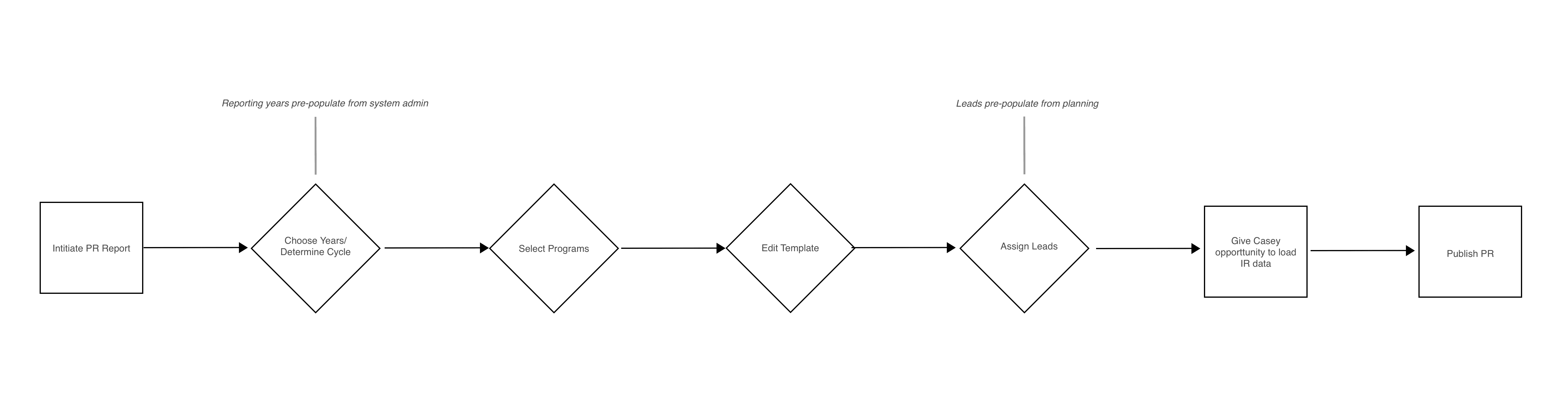

Plotting the User Flow and Wireframing

I generally wireframe an idea after getting feedback on several sketched concepts. Starting with low fidelity helps because it lets you communicate ideas quickly. It also focuses the discussion when soliciting team feedback: everyone understands we're talking about the general concept or an interaction pattern, not the visual treatment (pixel-perfect spacing, color, button size, etc.).

The value of realistic content in wireframes was reinforced time and again during this project, through both user testing and team feedback.

Rapid Prototyping

Over the next several months, I collaborated with my fellow designers, the product owner, the development team, internal stakeholders and, of course, users to test and gradually improve the administrator and faculty program review experience.

It was a fun challenge not only to balance user and business needs, but also to reconcile our designs with a work-in-progress design system while working within technical constraints. Consistent communication among everyone (designers, developers, and product) was essential.

We worked up to a beta test and subsequent release.

User Testing: Program Review Beta

Before the product's limited release, I wrote the testing guidelines, then scheduled and led the beta tests. In total, we spoke to 12 users (split between administrators and faculty) to validate our designs and continue smoothing out knots in the experience. The tests were a great practice opportunity for me; there's definitely an art to framing user tasks in moderated sessions. We learned a lot about how to improve our designs, especially the program review setup flow. Themes included:

Unclear language, e.g. "reporting years": users read it as the year the review is due rather than the years associated with the program review

Confusion around the steps of the process: users weren't aware of the micro-save before moving on to the next step

Unclear data integrations, specifically the program mission statement

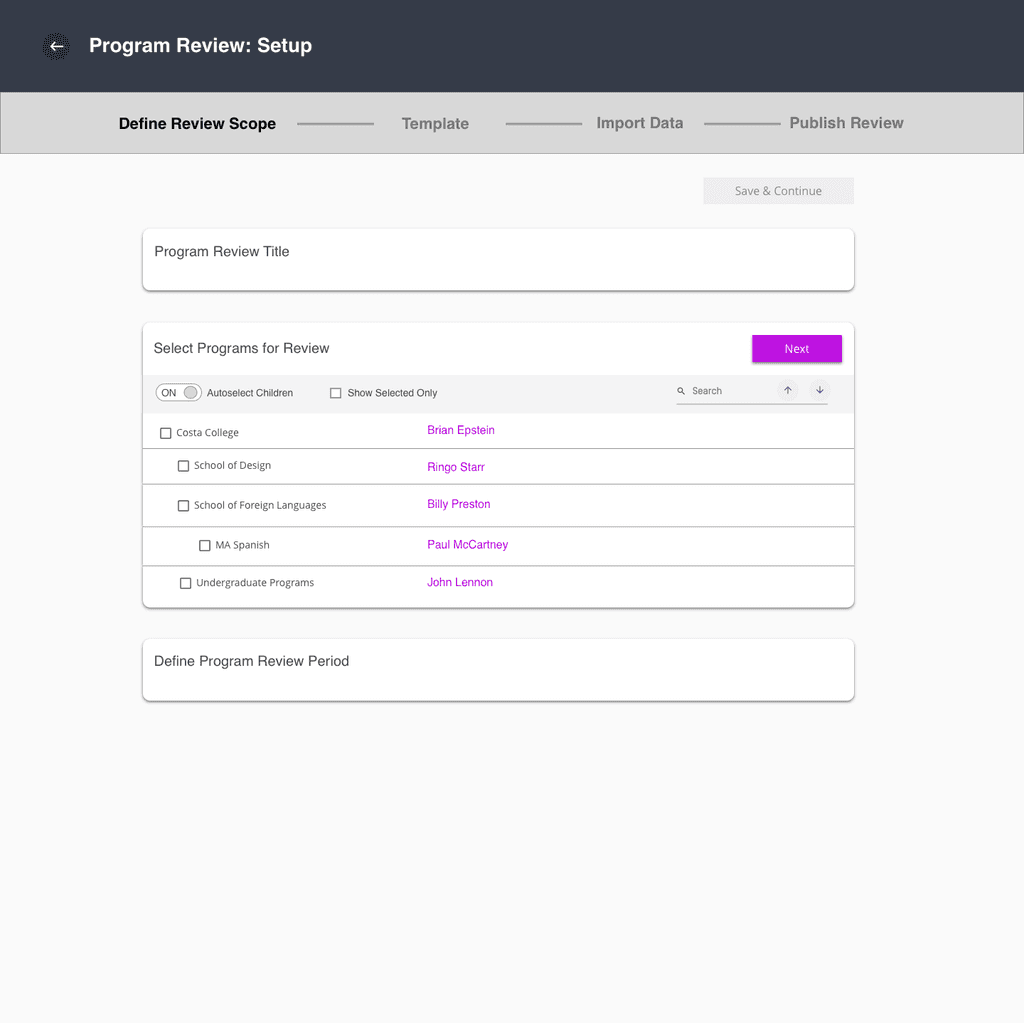

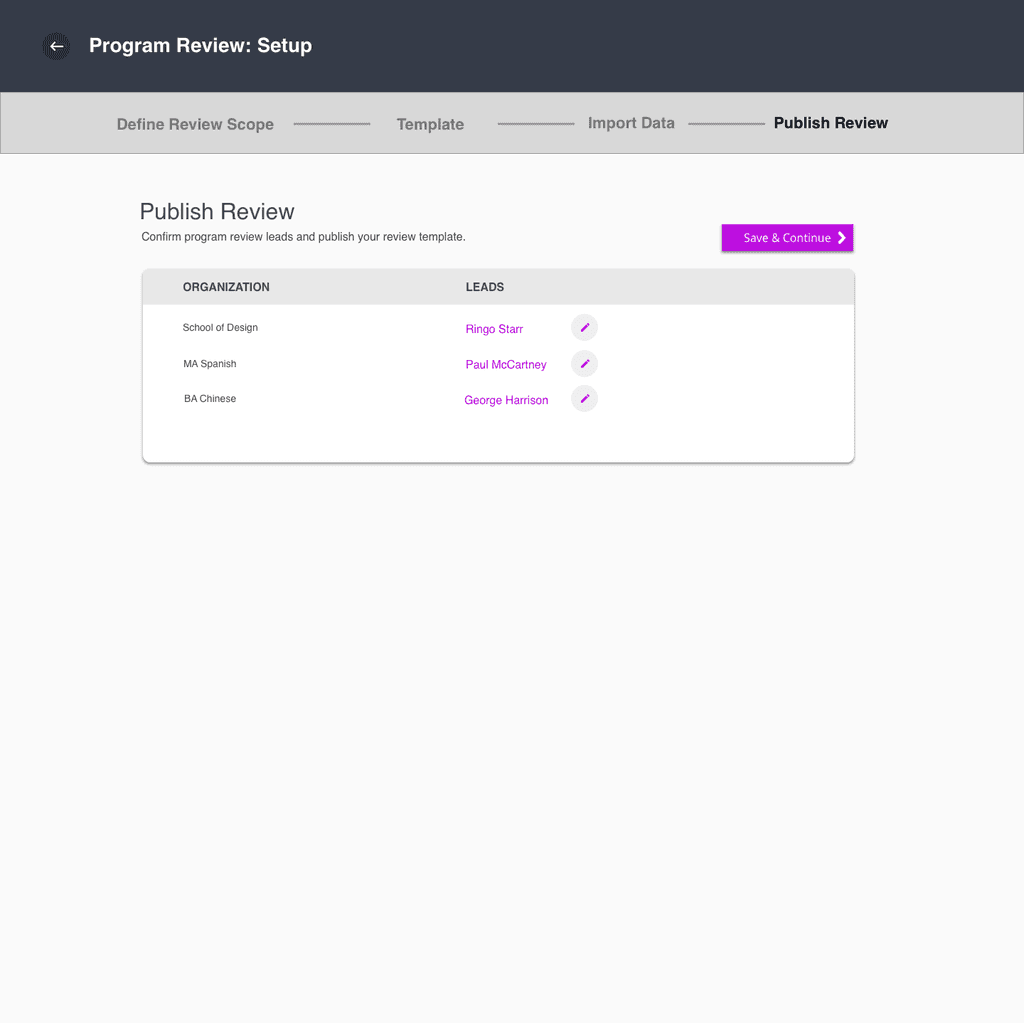

I compiled a testing summary document that included participant details, notes, and quotes. In it, I took a first pass at prioritizing next steps based on the feedback we heard during testing. Most of the action items were "quick fixes"; the logic of our design was sound thanks to iteration, collaboration, and consistently soliciting feedback (always trust the process!).

Below is a high-fidelity prototype of the improved program review setup flow, incorporating feedback from the beta tests.

Results

Within 3 months of release, 29 institutions, ranging from community colleges to private universities, were using the Program Review module. We received great feedback from both sales and clients on the administrator and faculty experience. In fact, one client recently shared that the default program review template we created not only aligned with their institution's practices but actually improved them: our template content inspired them to include faculty diversity as a review criterion.

Over the past year and a half, this project helped me further internalize the habits of an effective design practice, such as:

There's an art to framing the scenario or task when user testing. Be careful not to use leading language.

Communicate early and often. Stay on the same page as your product manager. Groom your backlog, and make estimation a priority during grooming.

Developers are design partners. Include them in the process as much as you can. Loop them into discovery activities and user feedback; they're motivated by a useful, intuitive user experience too.

Use realistic content when presenting designs: within the team, to developers, and especially when user testing.